This is a summary of a project I’ve been working on in my spare time over the last few weeks to get more familiar with Kubernetes in general, and Jenkins based docker build processes in particular. It’s not intended as a standalone guide, which would be prohibitively long! It builds on this blog post which I found very helpful in explaining the plumbing required to stand up a Docker based build agent. What I’ve documented here has ended up being significantly different from that original post, however, as what’s described also covers an agent which builds and deploys containers into Kubernetes.

I’m using a contrived example application in order to drive a build process triggered by polling a GitHub repo. The polling is to avoid having to make a NAT based inbound connection to my home network from GitHub, which would be necessary for a webhook. Other than the potential for build specifics, what the test app does isn’t very relevant in the context of this post but, for completeness, it’s node based and makes a call to a WordPress containerised application which has been altered to expose the WordPress API. It pulls a random post, which it then displays. (The contrivance is to introduce an API call within the cluster for service mesh injection – unrelated to this post.)

The end to end process here is a plausible example of a containerised app being deployed into a non-production environment (minus a slew of unit tests):

- Trigger a build to pull the source from GitHub.

- Do the application build (inside a container).

- Push that back to a registry – DockerHub in my case.

- Deploy it to the Kubernetes cluster.
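Stripped of Jenkins, the four steps above boil down to something like the following sketch. The function names, the `src` checkout directory and the `DRY_RUN` switch are hypothetical and only there for illustration; the real pipeline runs the equivalent commands as Jenkins stages.

```shell
# run: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# pipeline REPO_URL IMAGE MANIFEST
#   REPO_URL  Git repository to build from
#   IMAGE     image reference to build and push, e.g. user/app:tag
#   MANIFEST  path (inside the repo) of the Kubernetes manifest to apply
pipeline() {
  repo_url="$1"
  image="$2"
  manifest="$3"
  run git clone "$repo_url" src &&        # 1. pull the source
  run docker build -t "$image" src &&     # 2. build the application image
  run docker push "$image" &&             # 3. push it to the registry
  run kubectl apply -f "src/$manifest"    # 4. deploy to the cluster
}
```

Calling it with `DRY_RUN=1` prints the four commands without touching Docker or the cluster, which is a cheap way to check the arguments before running it for real.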

There are quite a few different approaches possible, not to mention variants within them. I looked at three, starting with the use of a Docker build agent.

So how does it hang together? This approach uses a standalone Docker server running outside of Kubernetes; everything else is inside the cluster. It doesn’t have to be: it just happens to be the starting point for me. You need to set Jenkins up to be able to talk to the docker host as the build agent. You then need to change the default configuration of the Docker API to be able to accept connections from the Docker CLI running via a Jenkins plugin. This configuration is security-critical: the API is running as root. This setup then allows the image for the build process to be pulled by Jenkins, which is a distinct step from the application image build itself. This is all described well in the blog post I referenced at the start.
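As a rough sketch of that API change, assuming a systemd-managed Docker host and TLS certificates that have already been generated, a drop-in like the one below makes the daemon listen on TCP port 2376 with client certificate verification, in addition to the local socket. The helper name `write_docker_dropin`, the certificate file names and the `/usr/bin/dockerd` path are my assumptions rather than anything from the original post; check `systemctl cat docker` for the flags your own unit already passes.

```shell
# write_docker_dropin DROPIN_DIR CERT_DIR
#   DROPIN_DIR  normally /etc/systemd/system/docker.service.d
#   CERT_DIR    hypothetical directory holding ca.pem, server-cert.pem, server-key.pem
write_docker_dropin() {
  dir="$1"
  certs="$2"
  mkdir -p "$dir"
  # The empty ExecStart= clears the packaged command before replacing it.
  cat > "$dir/override.conf" <<EOF
[Service]
ExecStart=
ExecStart=/usr/bin/dockerd -H fd:// -H tcp://0.0.0.0:2376 --tlsverify --tlscacert=$certs/ca.pem --tlscert=$certs/server-cert.pem --tlskey=$certs/server-key.pem
EOF
}
```

After writing the file as root, `systemctl daemon-reload` followed by `systemctl restart docker` picks up the change. Exposing the API without TLS is simpler, but anyone who can reach the port then effectively has root on the host.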

While still very helpful, the Dockerfile for the build agent provided by the author of the original blog post didn’t quite work for me: the steps to add the user were returning an error. Combining this with the differences for the Docker build and the additional functionality for the deployment phase, it makes for quite a different set of functionality:

    FROM ubuntu:latest

    # get rid of some tz issues since bumping the ubuntu version:
    ENV TZ=Europe/London
    RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone

    RUN apt-get update
    RUN apt-get -qy upgrade
    RUN apt-get install -qy git
    RUN apt-get -qy install curl

    WORKDIR /tmp

    # note: arm64 reference; substitute accordingly.
    RUN curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/arm64/kubectl
    RUN mv ./kubectl /usr/local/bin

    # may be unnecessary because of the mv command:
    RUN chmod 755 /usr/local/bin/kubectl

    # Install a basic SSH server (from original Dockerfile)
    RUN apt-get install -qy openssh-server
    RUN sed -i 's|session required pam_loginuid.so|session optional pam_loginuid.so|g' /etc/pam.d/sshd
    RUN mkdir -p /var/run/sshd

    RUN apt-get install -qy openjdk-8-jdk

    # pull just a single binary from an image:
    COPY --from=docker /usr/local/bin/docker /usr/local/bin

    # Add user jenkins to the image
    RUN useradd -ms /bin/bash jenkins

    # Set password for the jenkins user (you may want to alter this).
    RUN echo 'jenkins:jenkins' | chpasswd

    RUN mkdir /home/jenkins/.m2
    RUN mkdir /home/jenkins/.ssh
    RUN chown -R jenkins:jenkins /home/jenkins/.m2/
    RUN chown -R jenkins:jenkins /home/jenkins/.ssh/
    RUN mkdir /home/jenkins/.kube
    RUN chown -R jenkins:jenkins /home/jenkins/.kube

    EXPOSE 22
    CMD ["/usr/sbin/sshd", "-D"]

Per some of the inline comments, this is ‘rough but working’ :). There are a few pieces of functionality which are worth pointing out, in the order they appear:

- git: it’s the freshly minted build agent Docker container that does the git checkout specified in the pipeline definition. I’d initially assumed that Jenkins itself would do this, by virtue of the config in the pipeline. It doesn’t.

- The install of kubectl. I failed to get the Kubernetes CD plugin to work. I couldn’t get it to play ball with any of the variants of the secret it needs, so what I ended up doing was using a reference to kubectl in the Jenkinsfile, which therefore requires it to be installed in the build container.

- The rest of the components – sshd, the jenkins user, and the Java install – are, I think, just standard parts of the build agent. I’ve not come across any documentation that breaks down the functionality yet. Sshd and JNLP are an either/or for how Jenkins connects to the build agent. My working assumption is that this connection is how configuration files, such as the secret for kubectl (discussed later when I cover plugins), end up on the agent. I’ve not tried building and running the agent without Java, so it may just be a hangover from the original Dockerfile.

On the subject of the agent configuration, the build agent provided by JenkinsCI saves you having to build your own image from scratch, but there doesn’t seem to be an ARM version of it available at the time of writing. As my cluster is ARM based, that was a showstopper. It also serves as a base, so you’re probably going to have to extend it for things like docker build support anyway.

I mentioned some of the configuration steps earlier, some of which imply plugin requirements for Jenkins and which, again, are covered in the original post. In more detail:

- Installation of the Docker plugin for Jenkins.

- In the cloud configuration, a reference to your docker build agent – i.e., the host that exposes the Docker API.

- In the Docker agent template, a label that is referred to in the build-pipeline definition, and the registry which hosts the docker image pulled down by Jenkins and deployed to the agent.

The combination of these three allows Jenkins to find the Docker image for the build agent, and to stand it up on the nominated host running the Docker API.

For my variant, which includes the deploy-to-Kubernetes stage, I also need the Kubernetes CLI plugin, which allows me to reference the secret in my Jenkinsfile with the directive withKubeConfig.

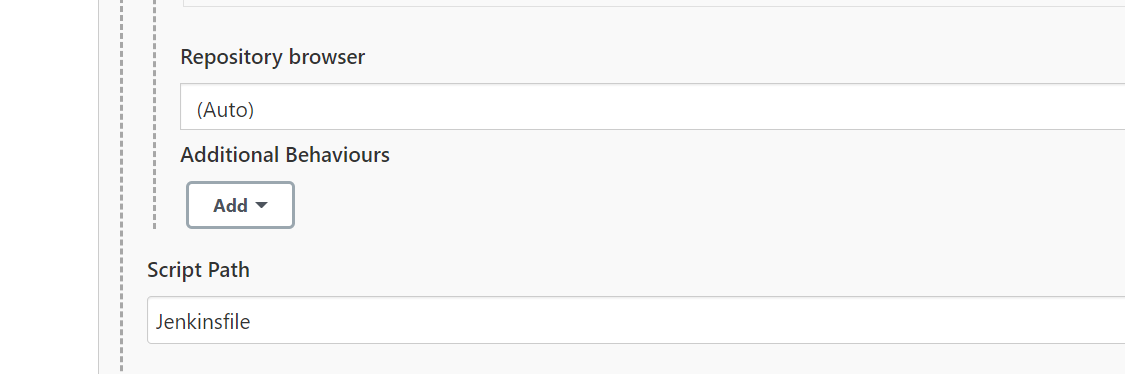

The final variation from the post is that I use a pipeline for the build and deployment, rather than a freestyle project. You then need to refer to the Jenkinsfile as part of the repo configuration:

There are undoubtedly better ways to separate out these files in the build process for industrial usage but this at least outlines a working approach.

So while this works reliably, it’s pretty complicated, and ties the container build to a specific host. I also spent quite a long time crafting the Dockerfile for the build agent. While you could scale the approach via the cloud configuration, it would be better to have everything running inside Kubernetes.

That leads me to the next approach I tried, again based on a different blog posting from the same site as above. This requires the use of the Jenkins Inbound Agent which again, at the time of writing, isn’t available for ARM, so a non-starter for my cluster.

So, what I looked at next is Kaniko. While it’s a doddle to get started with, I have hit a showstopper: a requirement for two ‘destinations’.

This repo is a copy of the same application that I’ve used with the Docker build above, but it includes an updated Jenkinsfile and the reference to the Kaniko pod spec. The pod uses a single destination parameter, which is to publish to Docker Hub. The credentials don’t appear in the configuration itself: they are supplied by a Kubernetes secret of type ‘docker-registry’.
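For reference, a secret of that type can be created with `kubectl create secret docker-registry`. The wrapper below is a minimal sketch: the function name, the secret name `regcred` and the overridable `KUBECTL` variable are hypothetical, and the server URL is the one the Kaniko documentation uses for Docker Hub.

```shell
# create_registry_secret NAME USERNAME PASSWORD
# Creates a docker-registry secret for Docker Hub in the current namespace.
# KUBECTL can be overridden (for example with "echo") to preview the command.
create_registry_secret() {
  name="$1"
  user="$2"
  pass="$3"
  ${KUBECTL:-kubectl} create secret docker-registry "$name" \
    --docker-server=https://index.docker.io/v1/ \
    --docker-username="$user" \
    --docker-password="$pass"
}
```

For example, `create_registry_secret regcred myuser mytoken` creates the secret, which the Kaniko pod spec can then mount as a volume. Passing the password as an argument leaves it visible in the process list and shell history, so an access token is a safer choice than an account password.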

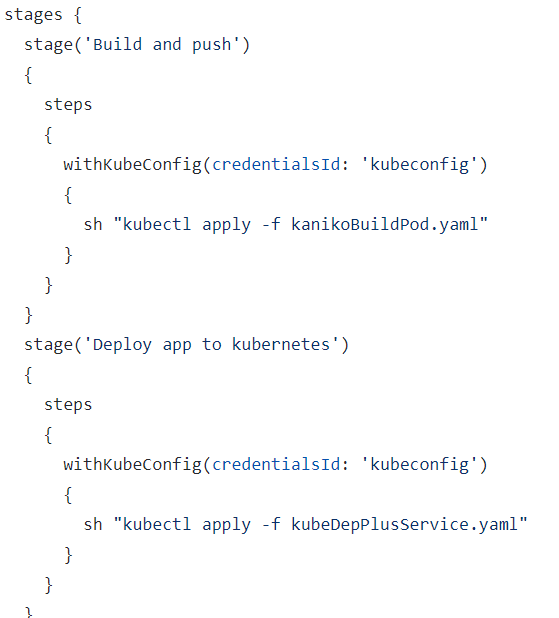

The Jenkinsfile has a problem, which isn’t immediately apparent:

The first kubectl call applies the pod spec, which includes the reference to pull the code from the GitHub repo.

The second one applies the deployment spec for the newly minted container, pulling it from Docker Hub. There’s a ‘but’, and it’s a showstopper: it assumes the first stage has completed. In the same way that kubectl apply returns immediately on the command line, the ‘build and push’ stage finishes immediately as well, long before the Kaniko build is done.

I haven’t figured out a way round this yet. The first approach I’ve looked at is to do the equivalent of the second kubectl apply from within Kaniko itself. While it supports multiple destinations, the out-of-the-box functionality appears to bind the required secrets to the supported destination types, which are in turn linked by either the secret type (for Docker Hub) or pre-canned environment variables – for GCP, AWS etc. I would need two volume mounts, one for Docker Hub, and one for the Kubernetes token for my cluster.

The second approach would be to have some sort of polling mechanism in the ‘Deploy’ stage to identify when the push to Docker Hub has completed. While it’s possible to poll an SCM service from within a declarative pipeline, I don’t see any way of adapting this for Docker Hub, particularly as the API appears to be restricted to Pro users.
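One possible variant of the polling idea, which I have not tried in this pipeline, is to poll the cluster rather than Docker Hub and wait for the Kaniko pod itself to reach a terminal phase. The sketch below assumes the pod runs with `restartPolicy: Never`, so that a failed build leaves the pod in the `Failed` phase; the function names and the default attempt count and delay are hypothetical.

```shell
# get_pod_phase POD: print the pod's current phase (Pending, Running, Succeeded, Failed).
get_pod_phase() {
  kubectl get pod "$1" -o jsonpath='{.status.phase}'
}

# wait_for_pod POD [MAX_ATTEMPTS] [DELAY_SECONDS]
# Returns 0 when the pod has Succeeded, 1 when it has Failed, 2 on timeout.
wait_for_pod() {
  pod="$1"
  attempts="${2:-60}"
  delay="${3:-10}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # A lookup error (for example, pod not created yet) counts as "not finished".
    phase="$(get_pod_phase "$pod")" || phase=""
    case "$phase" in
      Succeeded) return 0 ;;
      Failed)
        echo "pod $pod failed" >&2
        return 1
        ;;
    esac
    i=$((i + 1))
    sleep "$delay"
  done
  echo "timed out waiting for pod $pod" >&2
  return 2
}
```

In the ‘Deploy’ stage this would sit in front of the second apply, along the lines of `wait_for_pod kaniko && kubectl apply -f deployment.yaml`, where `kaniko` and `deployment.yaml` stand in for the real pod and manifest names. Because a non-zero return fails the shell step, it would also give the pipeline some visibility of a build that fails inside the pod, with `kubectl logs kaniko` showing the detail.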

A variation on this theme would be to use a separate pipeline completely for the deployment, triggered by a webhook called by Docker Hub. This looks easier but it’s not something I am going to do for the same reason I mentioned about not wanting to use GitHub webhooks: it means punching holes in my domestic firewall to some crash and burn kit.

As this is proving more difficult than it should be, I suspect that I may not quite be using Kaniko as intended. I’ve also skated over one issue, which is surfacing an error in the container build process when it’s wrapped up in the application of the pod spec. This is talking about something in the same sort of hinterland, but once the shell command executes, it has no visibility of an error which, if it occurs, is actually generated inside the Kaniko pod.

I will update this post if and when I figure it out.